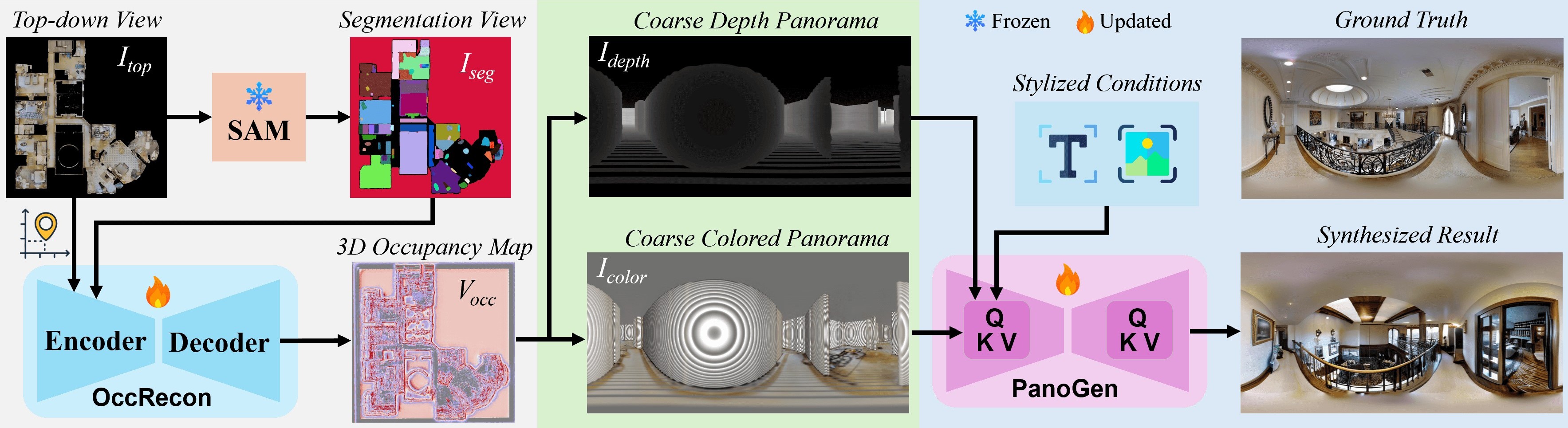

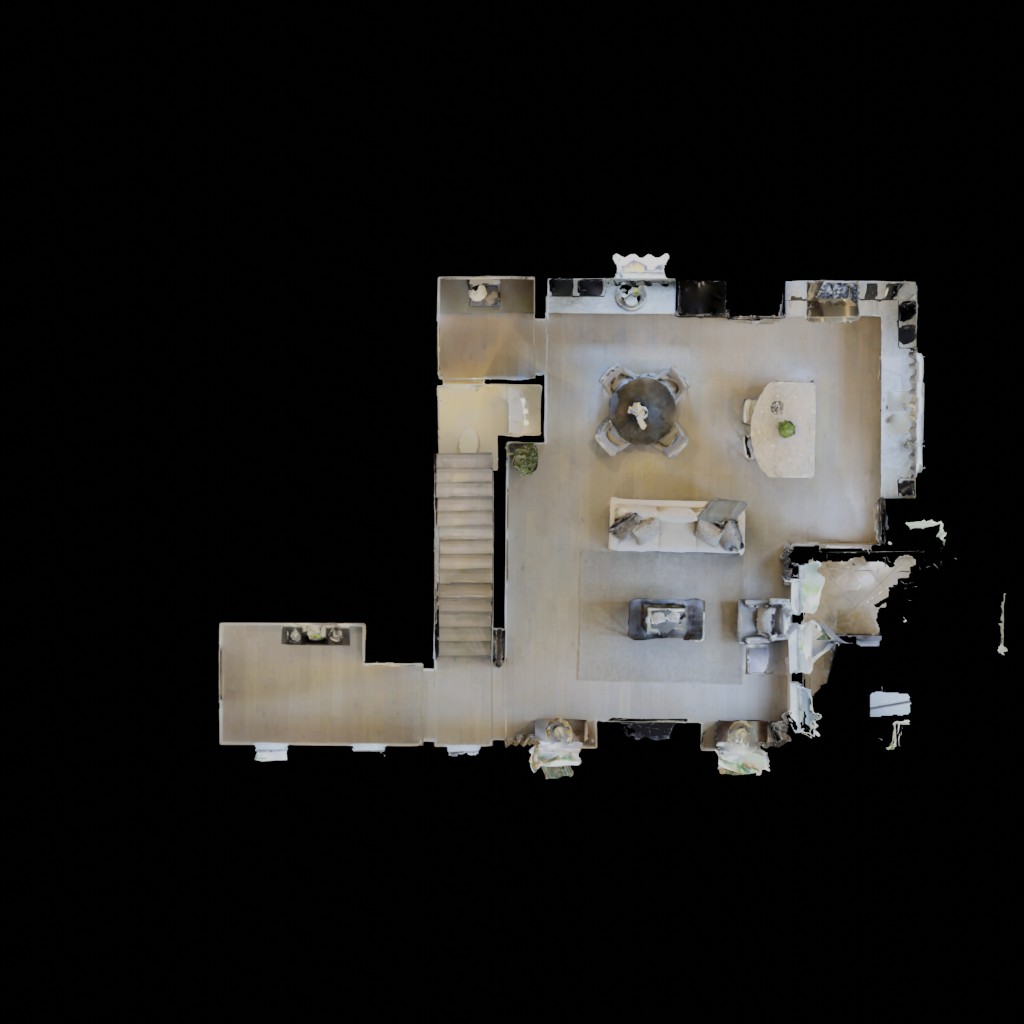

Generating immersive 360° indoor panoramas from 2D top-down views has applications in virtual reality, interior design, real estate, and robotics. This task is challenging due to the lack of explicit 3D structure and the need for geometric consistency and photorealism. We propose Top2Pano, an end-to-end model for synthesizing realistic indoor panoramas from top-down views. Our method estimates volumetric occupancy to infer 3D structures, then uses volumetric rendering to generate coarse color and depth panoramas. These guide a diffusion-based refinement stage using ControlNet, enhancing realism and structural fidelity. Evaluations on two datasets show Top2Pano outperforms baselines, effectively reconstructing geometry, occlusions, and spatial arrangements. It also generalizes well, producing high-quality panoramas from schematic floorplans. Our results highlight Top2Pano's potential in bridging top-down views with immersive indoor synthesis.

Given a top-down view and camera position, we first estimate 3D structure through volumetric occupancy prediction, then render coarse panoramas that guide a diffusion-based refinement process for high-quality output.
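The coarse rendering step can be illustrated with a minimal sketch. It assumes the occupancy volume is already available as a dense voxel grid of densities and colors, and it uses standard NeRF-style alpha compositing along equirectangular rays. This is not the authors' implementation: the function names, grid layout, and parameters (`voxel_size`, `n_samples`, `far`) are hypothetical, the occupancy predictor is replaced by a hand-built grid, and the ControlNet refinement stage is not shown.

```python
import numpy as np

def equirect_directions(height, width):
    """Unit ray directions for an equirectangular panorama (z is up)."""
    # Pixel centers: longitude spans [-pi, pi), latitude runs from top (+pi/2) to bottom (-pi/2).
    lon = (np.arange(width) + 0.5) / width * 2.0 * np.pi - np.pi
    lat = np.pi / 2.0 - (np.arange(height) + 0.5) / height * np.pi
    lon, lat = np.meshgrid(lon, lat)  # each (height, width)
    return np.stack([np.cos(lat) * np.cos(lon),
                     np.cos(lat) * np.sin(lon),
                     np.sin(lat)], axis=-1)

def render_coarse_panorama(density, color, cam_pos, voxel_size=1.0,
                           height=32, width=64, n_samples=64, far=10.0):
    """Alpha-composite a voxel grid along panorama rays (hypothetical helper).

    density: (X, Y, Z) non-negative densities; color: (X, Y, Z, 3) in [0, 1].
    Returns rgb (height, width, 3) and expected depth (height, width).
    """
    cam_pos = np.asarray(cam_pos, dtype=float)
    dirs = equirect_directions(height, width)              # (H, W, 3)
    step = far / n_samples
    t = (np.arange(n_samples) + 0.5) * step                # (S,) sample distances
    pts = cam_pos + dirs[:, :, None, :] * t[:, None]       # (H, W, S, 3)

    # Nearest-voxel lookup; samples outside the grid contribute zero density.
    idx = np.floor(pts / voxel_size).astype(int)
    shape = np.array(density.shape)
    inside = np.all((idx >= 0) & (idx < shape), axis=-1)   # (H, W, S)
    idx = np.clip(idx, 0, shape - 1)
    i, j, k = idx[..., 0], idx[..., 1], idx[..., 2]
    sigma = np.where(inside, density[i, j, k], 0.0)
    rgb_samples = color[i, j, k]                           # (H, W, S, 3)

    # Per-sample opacity, then transmittance accumulated over earlier samples.
    alpha = 1.0 - np.exp(-sigma * step)
    ones = np.ones_like(alpha[..., :1])
    trans = np.cumprod(np.concatenate([ones, 1.0 - alpha[..., :-1]], axis=-1), axis=-1)
    weights = trans * alpha                                # (H, W, S)

    rgb = (weights[..., None] * rgb_samples).sum(axis=-2)
    depth = (weights * t).sum(axis=-1)
    return rgb, depth

# Toy scene: a hollow 8x8x8 box with a red wall on the +x side, camera at the center.
density = np.full((8, 8, 8), 50.0)
density[1:7, 1:7, 1:7] = 0.0
color = np.full((8, 8, 8, 3), 0.5)
color[7] = [1.0, 0.0, 0.0]
rgb, depth = render_coarse_panorama(density, color, cam_pos=(4.0, 4.0, 4.0))
```

In this sketch, rays that leave the grid without hitting anything accumulate little weight, so their depth tends toward zero. A coarse color and depth pair like this is the kind of conditioning signal the caption describes being passed to the diffusion-based refinement.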

@inproceedings{zhang2025top2pano,
  title = {Top2Pano: Learning to Generate Indoor Panoramas from Top-Down View},
  author = {Zitong Zhang and Suranjan Gautam and Rui Yu},
  booktitle = {Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV)},
  year = {2025}
}